Future Shock ∞.0

In the Beginning

“In 1970, change was fast but still comprehensible. With AI, we’re facing change that may exceed human comprehension entirely. Not just faster change, but change that transforms the nature of change itself.”

I first remember learning about Alvin Toffler’s landmark 1970 book Future Shock in 1976, from a short documentary we watched in junior high social studies class. Ominously narrated by Orson Welles, it freaked out about a third of the class, amused another third, and bored the remaining third. I was freaked out but fascinated at the same time, a reaction that later morphed into an interest in the absurdist notion that all technological advancements mean “progress” and are “good.” Think Slim Pickens riding the bomb down at the end of Dr. Strangelove, or the end of Kurt Vonnegut’s Cat’s Cradle.

To counter the ominous documentary, we also watched a short film claiming that the coming proliferation of computers would let the working class finish their jobs in half the time, vastly increasing “leisure” time. Swing and a miss.

Future Shock (too much change in too short a time) was actually the first book in a trilogy that also included The Third Wave (1980), about an approaching era of decentralization and information, and Powershift (1990), about knowledge, information, and intelligence replacing monetary or asset wealth as the new source of power. In the best-case scenario, this shift would lead to greater equality and healthier democratic societies, but only IF knowledge, information, and intelligence remained decentralized, accurate, and free.

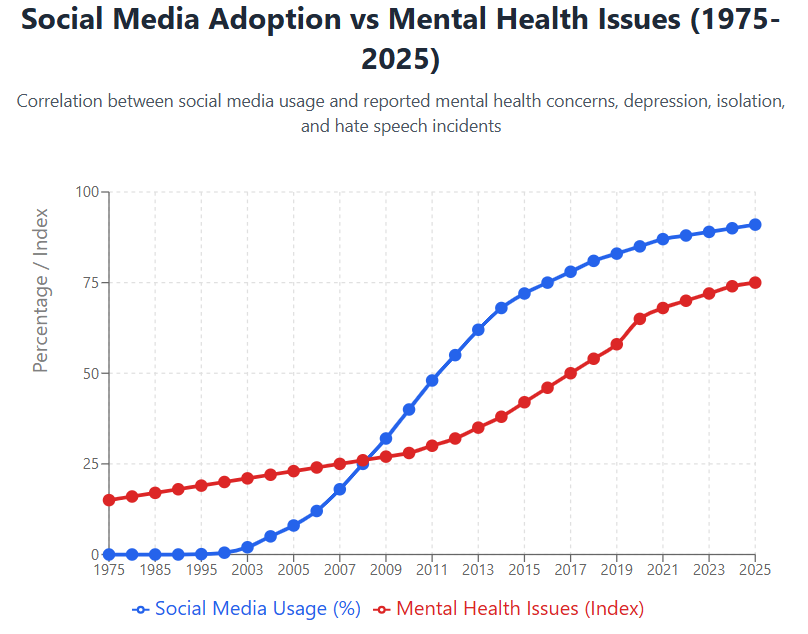

A key complicating factor is the dehumanization of communication brought on by the explosion of “Social” Media, another form of “Shock” to the human system. Coupled now with the potentially devastating advent of artificial intelligence, it is bringing the core warnings of the original book to full realization: information overload, institutional lag, and identity fragmentation, potentially leading to complete psychological and societal disorientation, all hastened by foundational erosion.

Foundational Erosions

Societal Erosion:

The Social Media revolution has accelerated the erosion of the very societal foundations we would need to assimilate the exponentially faster and larger wave of AI technology beginning to engulf us. As illustrated below, a synchronous relationship has developed between social media adoption and mental health issues (depression, isolation, the resulting tribalism, and ultimately hatred), all of which Future Shock warned of.

Environmental Erosion:

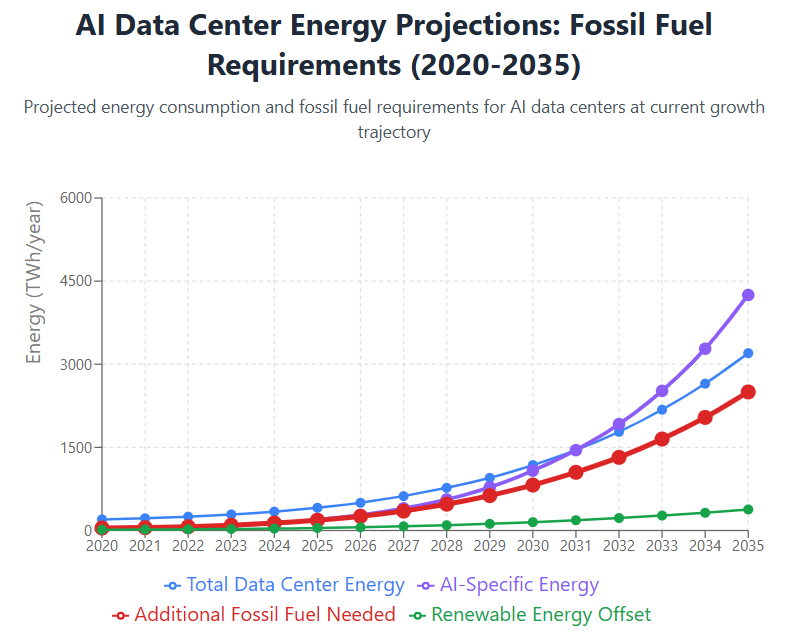

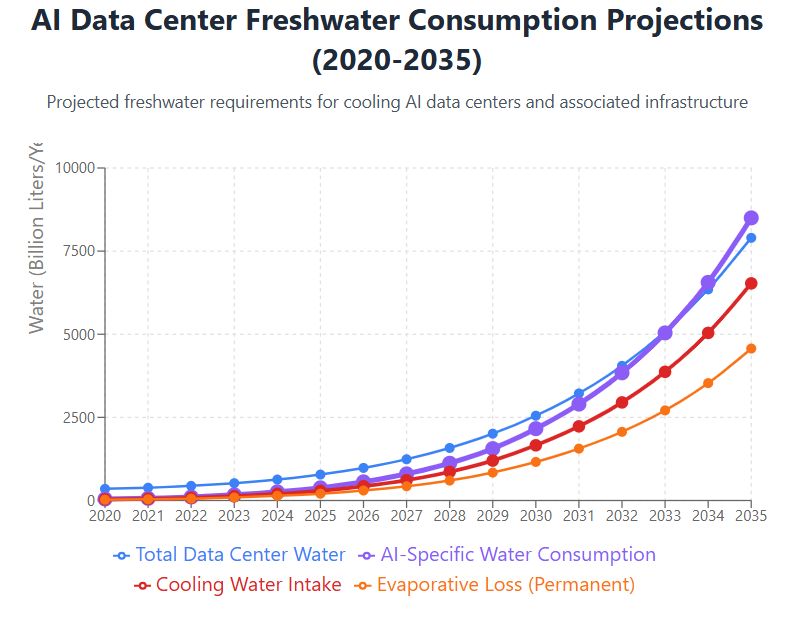

Negative changes to the Earth's health can degrade population health and economies and cause displacement through water insecurity, climate change, and other environmental factors. If accelerated, these changes will only exacerbate the worst-case scenarios posited in Future Shock. The estimated impact of AI, as illustrated in the following charts, suggests that AI could be the accelerant for a perfect storm.

Alarming Projections

- 2035: 2,500 TWh - equivalent to entire countries

- Coal/Gas plants: ~500 new large plants needed by 2035

- Growth rate: 35-40% annually for AI-specific demand
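To make the 35-40% annual growth rate more concrete, here is a minimal Python sketch of compound growth. It assumes the rate stays constant every year, which is a simplification, and the function names are illustrative rather than taken from any source.

```python
import math


def doubling_time(annual_rate: float) -> float:
    """Years for a quantity growing at a constant annual rate to double."""
    return math.log(2) / math.log(1 + annual_rate)


def growth_multiplier(annual_rate: float, years: int) -> float:
    """Factor by which a quantity grows after `years` of constant compounding."""
    return (1 + annual_rate) ** years


# The 35-40% range quoted above for AI-specific demand
for rate in (0.35, 0.40):
    print(f"{rate:.0%} per year: doubles every {doubling_time(rate):.1f} years, "
          f"x{growth_multiplier(rate, 10):.1f} after 10 years")
```

At these rates, demand would double roughly every 2.1 to 2.3 years and grow about 20 to 29 times over a decade.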

Key Drivers

- Large Language Models (ChatGPT, Copilot, Gemini, Claude, etc.)

- Training massive AI models requires enormous compute

- Planned hyperscale AI data centers by tech giants

Context & Scale

By 2035, AI could require fossil fuel energy equivalent to the entire electricity consumption of India (2023 levels)

Critical Assumptions

This projection assumes that current AI growth trajectories continue alongside existing energy efficiency improvements. It factors in currently planned data center expansions by major tech companies and typical grid energy mix ratios. Breakthrough efficiency gains or renewable energy deployment could alter these projections, but that is a big IF.

Critical Water Projections

- 2030: 2.16 trillion liters annually for AI alone

- 2035: 8.5 trillion liters - massive strain on supplies

- Evaporative loss: 4.57 trillion liters lost forever by 2035

- Growth rate: 30-35% annually for AI water needs
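As a rough consistency check, the 2030 and 2035 water figures can be converted into the compound annual growth rate they imply. This minimal Python sketch uses only the two projections listed above; the helper name is illustrative.

```python
def implied_annual_growth(start: float, end: float, years: float) -> float:
    """Compound annual growth rate (CAGR) that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# Projected AI water use in trillions of liters per year (figures from the list above)
water_2030 = 2.16
water_2035 = 8.5

rate = implied_annual_growth(water_2030, water_2035, 2035 - 2030)
print(f"Implied growth 2030-2035: {rate:.1%} per year")
```

The implied rate comes out at roughly 31.5% per year, inside the stated 30-35% range.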

Water Usage Elements

- Cooling systems: Evaporative cooling towers

- Server cooling: Direct liquid cooling systems

- Air conditioning: HVAC water consumption

- Backup systems: Redundant cooling infrastructure

Projected 2035 AI Water Use Comparisons

8.5 trillion liters annually equals:

- Water consumption of 50+ million people (entirety of South Korea)

- 3.4 million Olympic swimming pools

- Annual flow of the Colorado River

- Water needs of 15 million acres of farmland
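The pool comparison, and a per-person view of the population comparison, can be reproduced with simple unit arithmetic. This Python sketch assumes a nominal Olympic pool volume of 2,500 cubic meters (50 m x 25 m x 2 m) and takes the "50+ million people" figure at its lower bound; it does not attempt the river or farmland comparisons, which depend on figures not given here.

```python
TOTAL_LITERS = 8.5e12          # projected 2035 AI water use, liters per year
OLYMPIC_POOL_LITERS = 2.5e6    # assumption: 50 m x 25 m x 2 m pool = 2,500 cubic meters
PEOPLE = 50e6                  # "50+ million people", taken at the lower bound

pools = TOTAL_LITERS / OLYMPIC_POOL_LITERS
liters_per_person_per_day = TOTAL_LITERS / PEOPLE / 365

print(f"{pools / 1e6:.1f} million Olympic pools")
print(f"{liters_per_person_per_day:.0f} liters per person per day")
```

Under these assumptions, the total works out to 3.4 million pools, or about 466 liters per person per day for 50 million people.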

Critical Assumptions

Projections assume current cooling technologies and efficiency improvements, and are based on typical data center cooling water usage of 1.8-3.0 liters per kWh, including both direct consumption and evaporative losses. Breakthrough cooling technologies could significantly reduce these requirements, but the current trajectory suggests a major freshwater crisis without intervention.
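The liters-per-kWh assumption is what links the energy projections to the water projections. This minimal Python sketch shows that conversion; the 1 TWh input is a hypothetical round number chosen for illustration, not a figure from the projections.

```python
KWH_PER_TWH = 1_000_000_000  # 1 TWh = 10^9 kWh


def cooling_water_liters(energy_twh: float, liters_per_kwh: float) -> float:
    """Cooling water (liters) implied by an energy figure and a water-intensity assumption."""
    return energy_twh * KWH_PER_TWH * liters_per_kwh


# Hypothetical example: 1 TWh of data center load at the low and high ends of the range
low = cooling_water_liters(1.0, 1.8)
high = cooling_water_liters(1.0, 3.0)
print(f"1 TWh -> {low / 1e9:.1f} to {high / 1e9:.1f} billion liters")
```

Under this assumption, every additional terawatt-hour of data center load carries roughly 1.8 to 3.0 billion liters of cooling water.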

Economic Erosion:

We are already seeing this erosion in employment. Salesforce alone has laid off over 4,000 people due to AI, and Microsoft more than 15,000. Companies are jumping on the AI bandwagon, and at the highest levels they are quickly losing empathy for their employees. In a classic “Let them eat cake” move, many leaders dismissively claim that those affected will simply find new jobs or learn new skills, as if it were as easy as a snap of the fingers.

Because of AI, an estimated 425 million people will need reskilling by 2035:

- Current retraining systems cannot handle this scale

- Average reskilling takes 2-4 years for complex roles

- Skills become obsolete faster than people can retrain

- A massive investment will be needed in education infrastructure

- Current social safety nets are inadequate for this transition period
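To see why current retraining systems are unlikely to cope, the headline number can be turned into a rough throughput estimate with Little's law (average number of people in a system = arrival rate x time each person spends in it). This Python sketch is an order-of-magnitude illustration, not a forecast: it assumes, hypothetically, that the 425 million people are spread evenly over a 2025-2035 window, and it applies the 2-4 year program length to everyone, although the text gives that range only for complex roles.

```python
def concurrent_trainees(people: float, window_years: float, program_years: float) -> float:
    """Little's law (L = lambda * W): average number of people in training at once,
    assuming enrollments are spread evenly across the window."""
    arrivals_per_year = people / window_years  # lambda
    return arrivals_per_year * program_years   # L = lambda * W


PEOPLE = 425e6  # people needing reskilling by 2035 (figure from the text)
WINDOW = 10     # assumption: demand spread evenly over 2025-2035

for years in (2, 4):
    n = concurrent_trainees(PEOPLE, WINDOW, years)
    print(f"{years}-year programs: ~{n / 1e6:.0f} million people in training at once")
```

Under those assumptions, roughly 42.5 million people would need to enter retraining every year, with 85 to 170 million enrolled at any given moment.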

Socioeconomic Risk Factors

- Social unrest: Mass unemployment could trigger political instability and threaten democratic countries and institutions

- Inequality explosion: Concentrated AI ownership and control vs. displaced workers

- Generational divide: Older workers least adaptable to change

- Geographic concentration: Already affluent regions will become richer while already fragile regions and economies sink into depression

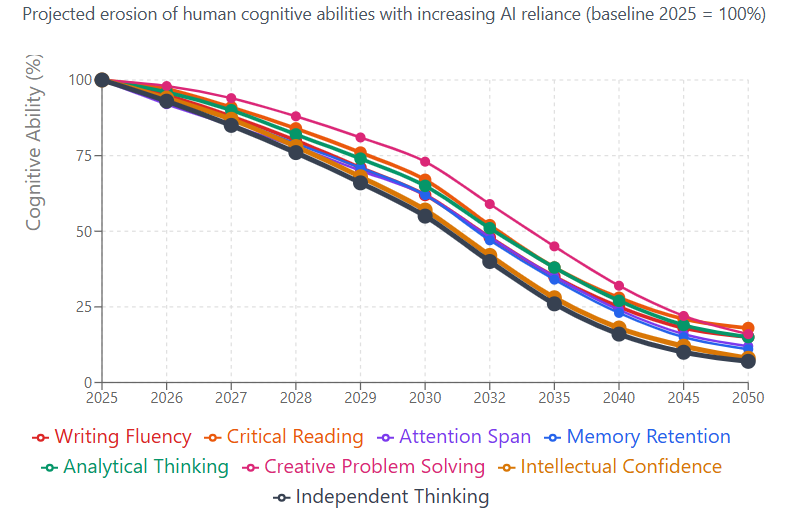

Cognitive Erosion:

We know what happens to muscles when we do not routinely work them out. But what about the brain? Some suggest that early research shows similar results, while others argue that AI will allow the brain to think at a higher level: the standard “calculators let us tackle higher math” argument. In this case, however, we are talking about replacing intelligence itself. When AI reads, writes, and thinks for us, common sense suggests the eventual erasure of our own intelligence.

Skill Atrophy:

- Writing fluency decline: Reduced practice leads to deterioration in sentence construction, vocabulary retrieval, and argument development

- Critical reading degradation: Weakened ability to analyze complex texts, identify logical fallacies, and synthesize multiple sources

- Attention span reduction: AI-assisted tasks require less sustained focus, potentially shortening deep concentration abilities

- Memory externalization: Increased reliance on AI for information retrieval reduces working memory exercise

Neural Pathway Changes:

- Brain regions associated with language production (Broca's area) show reduced activation

- Reading comprehension networks become less efficient without regular challenge

- Executive function areas responsible for planning and organizing thoughts may weaken

Potential Longer-term Impacts (5-15 years):

- Shallow vs. deep processing: Humans may develop a preference for AI-mediated quick answers over deep analytical thinking

- Pattern recognition changes: Less practice identifying subtle rhetorical strategies, bias, or logical structures in text

- Creative thinking impacts: Writing is thinking made visible - outsourcing it may reduce ideational fluency and conceptual flexibility

- Reduced self-awareness of one's own thinking processes

- Diminished error detection - less ability to spot mistakes in reasoning or argumentation

- Weakened intellectual confidence - uncertainty about one's own analytical capabilities

Potential Generational Effects (15+ years):

- Children who grow up with AI writing/reading assistance may never fully develop needed cognitive muscles

- Different cognitive strengths may emerge, but fundamental analytical capabilities could be permanently reduced

- Reduced ability to engage with complex historical texts or nuanced philosophical arguments

- Potential loss of cultural literacy requiring deep reading skills

- Risk of creating a dangerous "cognitive class or colonial governance structure" between AI-trained and traditionally trained populations

Potential Cognitive Decline from AI Dependency (2025-2050)

Shocks to the Systems:

- Decision paralysis: "Should I learn this skill, or will AI replace it?"

- Existential anxiety: "What's my purpose if AI can do my job?"

- Information overload: 24x7 news about new AI capabilities

- Reactionary nostalgia for simplicity: Longing for pre-AI certainties

- Social fragmentation: AI dividing people into "adopters" vs. "resisters"

- Professional Identity Crisis: Skills becoming obsolete faster than people can retrain, entire professions potentially disappearing within years

- AI dependence anxiety

- Uncertainty about when to trust AI vs. human judgment

- Loss of confidence in one's own cognitive abilities

- Questions about authentic human connection

- Children forming bonds with AI that may be deeper than human connections

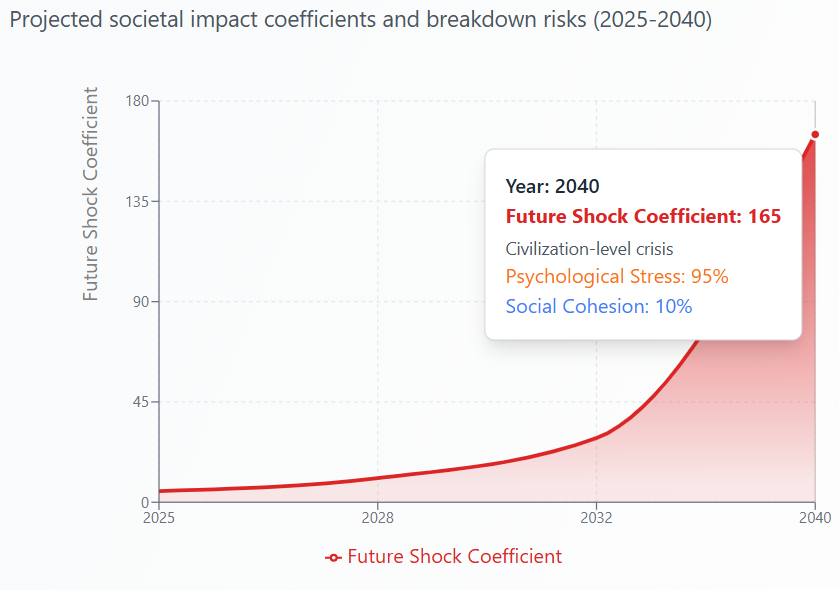

Toffler's Future Shock Framework Applied to AI

- Coefficient 1-2: Manageable stress, normal adaptation

- Coefficient 2-5: Increased anxiety, some disorientation

- Coefficient 5-10: Widespread psychological distress

- Coefficient 10-25: Social institutions begin failing

- Coefficient 25-50: Breakdown of adaptive mechanisms

- Coefficient 50+: Civilizational crisis, potential collapse

Critical AI Future Shock Thresholds (Timeline)

- 2025: Coefficient = 5.0 - Disorientation begins

- 2028: Coefficient = 10.8 - Major psychological stress begins

- 2032: Coefficient = 28.8 - Social breakdown risks

- 2040: Coefficient = 165.0 - Civilization-level crisis

Mathematical Framework

- Future Shock Coefficient = Rate of Change ÷ Human Adaptive Capacity

- Rate of Change includes: AI capability advancement, job displacement speed, technology adoption rates, social disruption frequency

- Human Adaptive Capacity includes: Learning speed, psychological resilience, institutional response time, social support systems
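The coefficient and its bands can be expressed as a small lookup. This is a minimal Python sketch, not the author's model: the article does not say how Rate of Change or Human Adaptive Capacity are measured, so the inputs here are hypothetical numbers on a shared scale, and because the listed ranges share endpoints (1-2, 2-5, and so on), the sketch assumes each lower bound is inclusive.

```python
from bisect import bisect_right

# Lower bounds of the framework bands. The original ranges share endpoints;
# this sketch assumes each lower bound is inclusive.
BAND_FLOORS = [2, 5, 10, 25, 50]
BAND_LABELS = [
    "Manageable stress, normal adaptation",       # below 2 (values under 1 folded in)
    "Increased anxiety, some disorientation",     # 2 to under 5
    "Widespread psychological distress",          # 5 to under 10
    "Social institutions begin failing",          # 10 to under 25
    "Breakdown of adaptive mechanisms",           # 25 to under 50
    "Civilizational crisis, potential collapse",  # 50 and above
]


def future_shock_coefficient(rate_of_change: float, adaptive_capacity: float) -> float:
    """Future Shock Coefficient = Rate of Change / Human Adaptive Capacity."""
    if adaptive_capacity <= 0:
        raise ValueError("adaptive capacity must be positive")
    return rate_of_change / adaptive_capacity


def classify(coefficient: float) -> str:
    """Return the band label for a coefficient (lower bounds inclusive)."""
    return BAND_LABELS[bisect_right(BAND_FLOORS, coefficient)]


# Timeline values from the thresholds listed above
for year, value in [(2025, 5.0), (2028, 10.8), (2032, 28.8), (2040, 165.0)]:
    print(year, value, classify(value))

# Hypothetical inputs on a shared scale: 3.0 / 2.0 gives a coefficient of 1.5
print(classify(future_shock_coefficient(3.0, 2.0)))
```

With lower bounds inclusive, a coefficient of exactly 5.0 lands in the 5-10 band; reading the endpoints as upper-inclusive instead would place it in the 2-5 band, closer to the timeline's "Disorientation begins" label.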

And, In the End?

There is no end of history; each generation must assert its will and imagination as new threats require us to retry the case in every age.

Shoshana Zuboff – The Age of Surveillance Capitalism

If, as the data may suggest, humanity reaches the civilization-level crisis stage of Future Shock within 15 short years, does democracy survive? Does capitalism survive? More critically, does the planet survive? As AI capabilities increase, is control over AI, and in turn over information (power), becoming decentralized and democratized? The current trend indicates just the opposite. AI technology is consolidating in a handful of companies that not only control more wealth than ever before but are also beginning to dictate the direction of AI development and decide what level of governance is acceptable to them. Organizations are taking note. Amnesty International has gone so far as to declare a human rights crisis due to the concentration of power in Big Tech.

So, what can we do? Over the past year I’ve heard numerous suggestions, such as creating AI-free spaces and times for purely human interaction, diligently preserving human-only activities and traditions (including writing, reading, and critical thinking), and maintaining institutions that anchor human identity (including theater, concerts, galleries, nature, and sporting events).

The first imperative step toward avoiding an existential, Future Shock-like crisis is to RESIST acceptance: acceptance that all tech innovation is positive, acceptance that big tech’s definition of AI is the only useful one, acceptance that the consolidation of power and knowledge is harmless, acceptance that abdicating privacy for convenience and AI training is necessary. The window of opportunity is closing. Resistance must start today.

Sources:

Overall:

- Current industry energy consumption reports from data center operators

- AI growth projections from major tech companies' expansion plans

- Energy sector analysis of data center power requirements

- Grid energy mix data showing fossil fuel vs renewable percentages

- Academic studies on AI computational energy demands

- Industrial water consumption reports from hyperscale data centers

- AI training and inference power consumption estimates

- Evaporative cooling system efficiency data

- Regional water stress assessments from environmental agencies

- Labor automation studies from economics research institutions

- AI capability progression research from AI labs

- Job displacement surveys from various industries

- Employment data from Bureau of Labor Statistics

- Technology adoption timeline studies

- Retraining and workforce transition research

- Historical social media user adoption data from platform companies

- Mental health trend surveys and clinical studies

- Longitudinal psychology research on digital technology impacts

- Population health surveys from health organizations

- Academic research on correlation between social media use and mental health indicators

Companies, Institutions, and Journals:

- International Energy Agency (IEA)

- Microsoft

- Meta

- Amazon AWS

- McKinsey Global Institute

- Oxford Economics

- MIT

- Amnesty International

- Organization for Economic Co-operation and Development

- Cyberpsychology, Behavior, and Social Networking

- World Economic Forum's "Future of Jobs" reports

- MIT Brain Study (2025) - "Your Brain on ChatGPT"

- Multidisciplinary Digital Publishing Institute

- UK Study on Critical Thinking (2025)

- Microsoft Research on Knowledge Workers

- PsychUniverse

- International Journal of Educational Technology in Higher Education

- Smart Learning Environments

- Polytechnique Insights

- From tools to threats: a reflection on the impact of artificial-intelligence chatbots on cognitive health (AI-chatbot-induced cognitive atrophy, AICICA)

- Generative AI: the risk of cognitive atrophy

AI Use Transparency Statement:

100% of this text was written by a human. AI was used in part for research and for designing the charts.

Sales 3.0 Labs

Through research and education, we strive to elevate ethics and transparency in both AI and the Sales Industry. Our pledge to you: we will continually provide unbiased information to help you cut through the current fog of self-proclaimed AI "experts" and hucksters.
